Technology that helps people do their own legal work should be against the law.

That at least is the view of some bar authorities, and the implication of regulatory proposals. Many lawyers think that any form of legal assistance not provided by a licensed attorney is unlawful. One notices the same instinct among law students.

I beg to differ.

Illegal Tech

“Legal tech” has long centered on uses by legal professionals. Few vendors and practitioners take interest in tools people use to deal with their problems outside a lawyer/client relationship. Software services that do things that lawyers regard themselves as exclusively authorized to do are more likely seen as illegal tech. Should they be? Is a machine running software that helps someone do legal work engaged in the “unauthorized practice of law” (UPL)? Are the software authors and system operators so engaged?

| About the Author |
|---|
| Marc Lauritsen is a Massachusetts lawyer and educator with an extensive background in practice, teaching, management, and research. He helps people work more effectively through knowledge systems. He has taught at five law schools. He is president of Capstone Practice Systems. |

A deep dive into those questions back in 2013 led me to conclude that most software applications are works of authorship that governments can’t legitimately suppress consistent with rights of free expression. My analysis surfaced as Liberty, Justice, and Legal Automata in a symposium volume of the Chicago-Kent Law Review. A version aimed at a different audience – Are We Free to Code the Law – was published in the Communications of the ACM. (These pieces largely overlap, although each contains good material not in the other.)

I returned to these themes in Safe Harbors and Blue Oceans, triggered by suggestions in California that only “state-certified/registered/approved entities” would be permitted to field “technology driven systems” without running the risk of prosecution. More recently, I’ve seen a draft article proposing that certification be used to exempt at least some applications from UPL.

The Counter Argument

My basic take goes like this:

- Characterizing software services as “law practice” is wrongheaded definitionally. Such services bear little resemblance to the work of lawyers. Very few claim to be those of a lawyer, or mislead customers into so thinking. Unless a bar authority has gotten through legislation that declares illegal all law-related help that is not provided by a licensed attorney, many UPL rules are inapplicable.

- As a matter of social policy, suppressing valuable services for needy people because a small number may convey inaccurate or incomplete information is counterproductive. Categorically treating all artificial legal assistance as unauthorized practice seems misguided. Even if few providers are prosecuted, the risk of prosecution deters valuable innovation.

- In any event, software applications are works of expressive authorship that deserve as much protection as self-help books and YouTube videos under our First Amendment. An entire category of software can’t be outlawed just because of the nature of its content.

In Stanley v. Georgia (1969), Justice Thurgood Marshall noted that the First and Fourteenth Amendments protect the right to receive information and ideas (in that case, obscene material) as much as to promulgate them.

If people have the right to consume material of their choice in the privacy of their homes, don’t they have the right to access legal guidance, such as how to get a protective order against domestic violence?

I think my overall analysis holds up pretty well, although it’s received little scholarly attention, let alone judicial adoption. Does the advent of generative AI change the picture? Let’s first consider some of the earlier issues.

Balancing Risks and Benefits

Fundamental rights to create and consume artifacts that dispense knowledge shouldn’t depend on cost-benefit analysis. We tolerate serious harms elsewhere for the sake of freedom of speech. Bad advice is not generally criminalizable. Or even the basis for civil liability.

Bar authorities are understandably concerned about opening the floodgates to deceptive and defective substitutes. Yes, in many situations people with legal needs are best served by professionals with special tools and know-how. Yet views in this area are often bottomed on imagined harms of unregulated legal help software that are overstated.

Sure, people can be hurt if they rely on answers or documents generated by systems that are wrong. That’s a consequence of any free market of ideas. And forgoing UPL prior restraints out of respect for the Constitution does not leave us defenseless.

Encouraging someone to believe that they are in a lawyer/client relationship with you when you don’t intend to play that role, or are unable to, of course is a problem. Providers of legal assistance systems that purport to deliver attorney/client style services (without a genuine such relationship) seem legitimately prosecutable for unauthorized practice. Those that don’t (the vast majority) should not be victims of the bar’s overreach.

False and misleading claims of course are also actionable under consumer protection laws. The solution is not to punish providers just for having failed to be “authorized,” but for doing something actually bad.

What about Human Help?

While the fairness and effectiveness of requiring years of specialized education and bar exam passage before folks can legally engage in the practice of law are debatable, I’ve had little trouble accepting occupational rules that limit who can represent others before specialized tribunals like trial courts.

Yet as a progressive with libertarian instincts, I’ve also long felt that if you can help someone deal with a legal problem, you should be able to do so, whether or not you’re a lawyer. Just don’t pretend to be one, or suggest that the special privileges and ethical frameworks of the legal profession are available to those you help. Helping someone do routine legal work is more like whitening teeth as a non-dentist than flying a commercial aircraft as a non-pilot.

Legal Advice From Nonlawyers: Consumer Demand, Provider Quality, And Public Harms, by Rebecca Sandefur, is an eye-opening reminder that “there is demand for legal advice and other services from nonlawyer providers, and such providers can produce services that are as good as or better than those of attorneys.”

The Frontline Justice initiative is dedicated to the proposition that community justice workers are as effective as lawyers, or more so, in helping people to resolve their civil justice issues, and shouldn’t be barred from doing so. That idea is now being tested in several cases involving the Upsolve nonprofit. (See, e.g., Can Software Engage in the Unauthorized Practice of Law? and NY Nonprofit Upsolve Will Take Right-to-Provide Legal Advice Case to Appeals Court.)

What about Generative AI?

Ed Walters takes up the GenAI developments in a timely piece, Re-Regulating UPL in an Age of AI. He proposes a new framework for regulating software, with a focus on consumer protection, transparency, and competency.

My own earlier analysis rested in part on attributes of software services that distinguish them from human ones, accepting arguendo that the latter were fair game for UPL coverage.

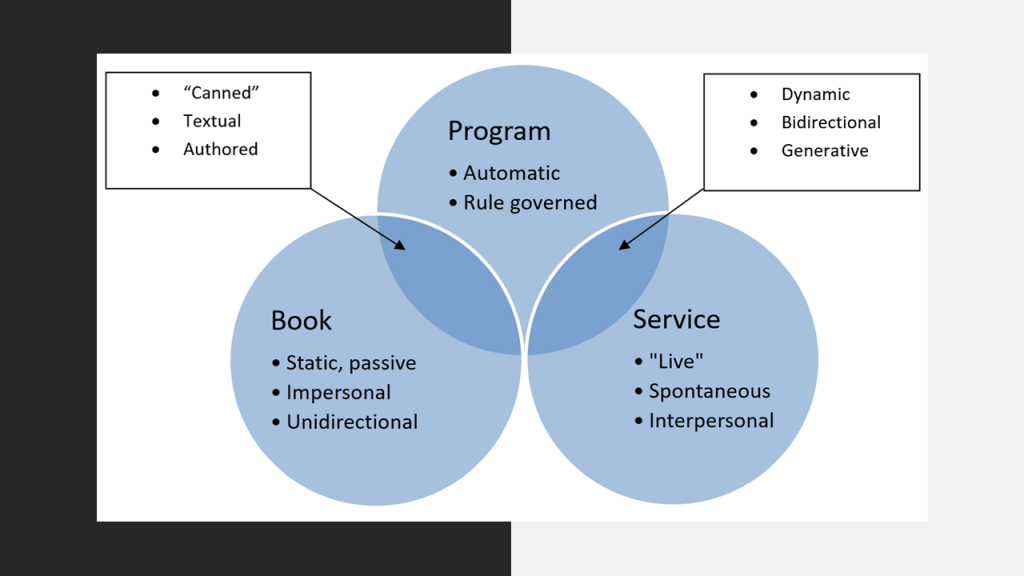

The kinds of systems I focused on already were interactive and generative, like people. But they were written in advance of their usage. They weren’t spontaneous or unpredictable, I argued. Given applications yield the same responses to the same inputs. They are closer to books and similar works of authorship than to human services.

I summarized this in the following diagram:

How do chatbots and other front ends to large language models fare under this analysis? They clearly aren’t “authored,” rule-governed, or deterministic in the same way. They can give different responses to the same prompts.

My 2013 article had this footnote: “[A]s a preview of coming attractions, technological advances may blur this distinction. Some systems will eventually learn and evolve, perhaps in [the] very engagement with a current user, in ways that make it seem that they are no longer fully ‘pre-written’ or pre-determined. And what if a program goes out and conducts research based on a communication from a user? It thus might access and use data that may not have been in the same state, or even available, for a different user at another time.”

A largely machine-learned system, especially one with real-time RAG (retrieval augmented generation), definitely is harder to characterize as a work of individual authorship. Yet it is still a product of human ingenuity, not a human in disguise. As creative expression it deserves protection.

Legal Help By Other Means

In 2015, the U.S. Court of Appeals for the Second Circuit noted that the parties in Lola v. Skadden, Arps, Slate, Meagher & Flom LLP had agreed in oral argument that those engaging in “tasks that could otherwise be performed entirely by a machine cannot be said to engage in the practice of law.”

Imagine, sometime in the 2030s, that machines have intelligence that is indistinguishable from the best human intelligence. (That does not mean that they will be infallible, or that all machines will agree, even on factual matters.) Will consultation with such agents about legal issues be forbidden? If machines routinely outperform lawyers, will it make sense to let lawyers prohibit their use? Will we only allow artificial lawyers to practice when they are being puppeteered by a responsible human?

Things that do things you used to have to go to a lawyer for should be welcome. Like having an ice cube maker in your refrigerator when folks used to have to wait for an ice man to deliver blocks that had been harvested from a pond.

Maybe only lawyers should be permitted to do things that only lawyers are capable of doing. But that is a small and shrinking set. Outside that realm lawyers should compete on the merits. (If they make smart use of AI, they have a secure future.)

Carving out Exceptions

Certification proposals for legal self-help software give oxygen to the idea that the lawyer monopoly extends by default to all forms of legal help.

Being safely exempt from a potential legal consequence only if you have managed to become certified by some “authority” is de facto prior restraint for those who haven’t, or can’t afford to. Such a regime chills beneficial innovation.

It also frankly seems unadministrable. Quinten Steenhuis and I surveyed the challenges and opportunities around measuring quality in substantive legal applications in a 2019 article. That’s clearly a tough problem! Do bar authorities have the budgets and capacity to operate, or contract with, facilities for testing all proposed applications effectively and fairly? Will providers face a patchwork of state-specific rules and procedures? Will apps need to be re-approved after each code push?

Which is not to say that standards, and maybe even certifications, aren’t highly desirable. Just not as pre-qualification.

Legal apps should be subject to the same general rules, and held to the same general standards, as any other medium that helps people do things. They shouldn’t be treated differently because they offer law-related guidance. Enough with lawyer exceptionalism.

Elbow Room

We should do everything we can to ensure that legal help of high quality is widely available.

Prohibiting such help when it doesn’t involve lawyers, or requiring it to get a seal of approval from some “authority,” does not advance that goal. Consumers should have choices. Whether the choice in a given situation is wise or optimal should be up to them. Lawyers can deliver exceptional value to those who can afford them. They also can play key roles in educating the public about good and bad sources of legal help. Law schools can help students learn how to assess tech solutions. But we don’t need heavy-handed bar authorities elbowing competitors off the field.

Lawyers can be too blinded by protectionism and paternalism to embrace the reality that they simply can’t serve more than a small fraction of those who need help. UPL is a blunt instrument that is wholly unsuited to regulating automated legal advice systems.

Let’s nurture alternative service delivery models, not throttle them. Let’s encourage and fund the good ones.

Fred Rogers advised us, when seeing scary things: “Look for the helpers.” They usually act without permission from the authorities.

Robert Ambrogi Blog
