Want to be as smart as Google’s T5 or Facebook’s LLaMA? Well then, you should keep reading this blog, because it was used to help train them.

For all the attention being paid to the current generation of AI built on large language models, such as ChatGPT, most of us know little about the text used to train them.

Now, The Washington Post has lifted the cover off this black box. Working with the Allen Institute for AI, it analyzed Google’s C4 data set, “a massive snapshot of the contents of 15 million websites that have been used to instruct some high-profile English-language AIs,” including Google’s T5 and Facebook’s LLaMA.

It then categorized those websites (journalism, entertainment, etc.) and ranked them by how many “tokens” each one contributed to the data set. Tokens are the small bits of text that AI models use to process disorganized information.

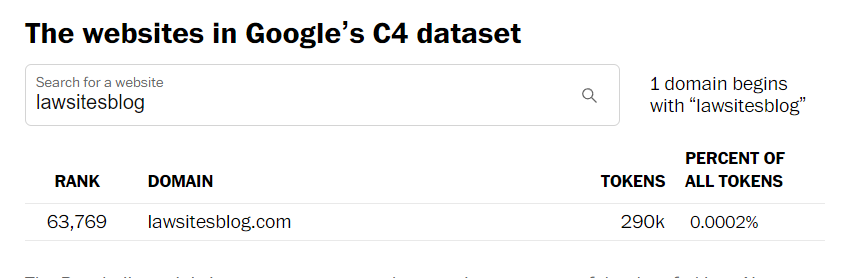

In addition to analyzing all these sites, the Post created a searchable database of every website in Google’s data set. As it turns out, this blog is one of them.

The LawSites blog ranked 63,769th among all the sites in the data set, contributing 290,000 tokens, or 0.0002% of all tokens in the data set.

Of course, LawSites was hardly the only law-related site in the data set. By searching for words such as law, legal, court and case, I found some of the other legal sites that were included. Here is a sampling, listed with their ranks:

- FindLaw Case and Codes, 23.

- Justia Patents, 37.

- U.S. Securities and Exchange Commission, 39.

- Justia Supreme Court, 50.

- Justia U.S. Law, 75.

- Casetext, 124.

- The Legal Information Institute at Cornell, 300.

- Law Insider, a repository of contracts, 649.

- The Virtual Law Library of the Philippines law firm Chan Robles, 856.

- The no-longer-active Law Professor Blogs Network, 1,655.

- Law.com, 5,898.

- American Bar Association, 8,266.

- LexisNexis, 21,045.

- Fastcase, 108,713.

- LexBlog, 110,534.

- My Shingle, 164,557.

- Thomson Reuters, 175,911.

- Legal Evolution, 194,595.

- ILTA, 929,143.

- Bloomberg Law, 11,209,960.

(After I published this post, someone pointed out to me that the data is broken down by subdomain. So, for example, at least three of the entries came from the same source, Justia. I have added Justia’s patents and Supreme Court subdomains above. That would mean that, cumulatively, Justia contributed 92 million tokens, which would appear to make it the fifth-largest source of data, just behind The New York Times.)

You can go in and search for your favorite legal sites and see where they rank. But, clearly, the bottom line is that you should keep reading this blog.

Robert Ambrogi Blog