How do AI-driven legal research platforms — specifically those that provide direct answers to legal questions — stack up against each other?

That was the question at a Feb. 8 meeting of the Southern California Association of Law Libraries, where a panel of three law librarians reported on their comparison of the AI answers delivered by three leading platforms – Lexis+ AI, Westlaw Precision AI, and vLex’s Vincent AI.

While all three platforms demonstrated competency in answering basic legal research questions, the panel found, each showed distinct strengths and occasional inconsistencies in its responses to the legal queries the librarians posed.

But after testing the platforms using three separate legal research scenarios, the panelists’ broad takeaway was that, while AI-assisted legal research tools can provide quick initial answers, they still should be viewed as starting points rather than definitive sources for legal research.

“This is a starting point, not an ending point,” said Mark Gediman, senior research analyst at Alston & Bird and one of the three panelists, stressing the continued importance of using traditional legal research skills to verify the AI’s results.

Evaluating Three Legal Questions

I did not attend the panel. However, I was provided with an audio recording and transcript, together with the slides. This report is based on those materials.

In addition to Gediman, the other two law librarians who compared the platforms and presented their findings were:

- Cindy Guyer, senior knowledge and research analyst, O’Melveny & Myers.

- Tanya Livshits, manager, research services, DLA Piper.

Using each of the platforms, they researched three questions, all focused on California state law or federal law within the 9th U.S. Circuit Court of Appeals, which covers California:

- What is the time frame to seek certification of an interlocutory appeal from the district court in Ninth Circuit?

- Is there a private right of action under the California Reproductive Leave Loss for Employees Act?

- What is the standard for appealing class certification? (California)

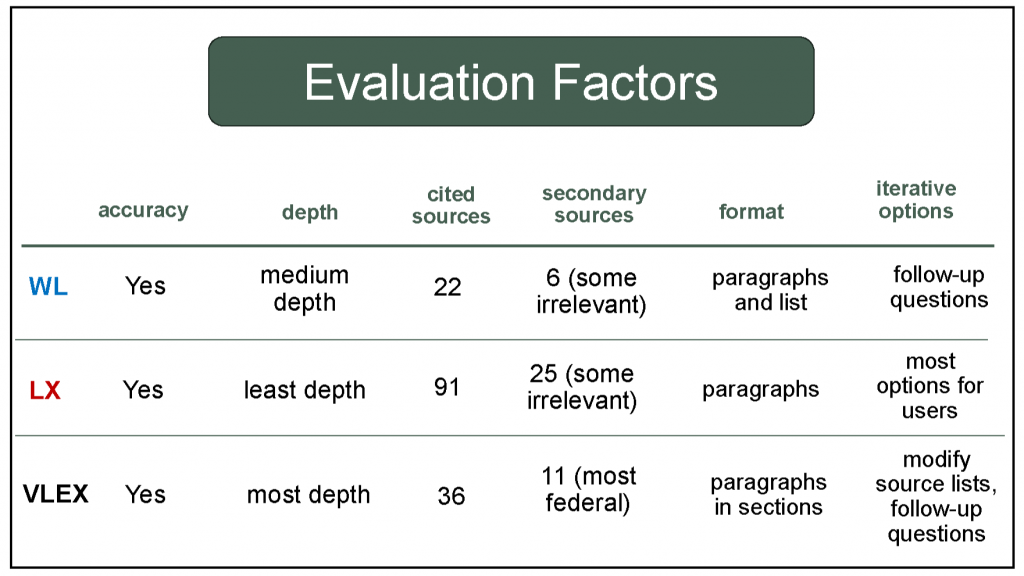

They evaluated the results delivered by each platform using six factors:

- Accuracy of answer.

- Depth of answer.

- Primary sources cited.

- Secondary sources cited.

- Format of answer.

- Iterative options.

First Question: Appeal Timeframe

For the first question, regarding the timeframe for interlocutory appeal certification in the Ninth Circuit, all three platforms identified the correct answer, which is 10 days after entry of the certification order.

The answer given by Lexis+ AI properly included a citation to the controlling federal statute, as well as related case law, and put the citations directly in the text of the answer, as well as listing them separately. When the panelist asked it a follow-up question about how the 10 days is calculated, it gave what she considered to be a good explanation.

Westlaw Precision AI performed much the same as Lexis+ AI, answering the question correctly, presenting the answer in substantially the same form, and citing substantially the same authorities. It also included the citations directly within the text of the answer.

Vincent AI, while also providing the correct answer, cited different cases than Lexis and Westlaw. It also showed some inconsistency in how it presented authorities, with the key governing statute appearing in the answer but not in the accompanying list of legal authorities.

Second Question: Private Right of Action

The second question, concerning a private right of action under California’s 2024 Reproductive Leave Loss Act, revealed more significant differences among the platforms. This question was particularly challenging, panelist Gediman said, in that it involved legislation that took effect just over a year ago, on Jan. 1, 2024.

Lexis+ AI and Westlaw Precision AI reached similar conclusions, both finding no explicit private right of action. However, Vincent AI reached the opposite conclusion, finding that there is a private right of action. Notably, it found a relevant regulatory provision that the other platforms missed. In arriving at its answer, it also interpreted statutory language in a way that the panelist said was “an arguable assumption but not explicitly stated.”

“Now that does not mean that Westlaw and Lexis were wrong and Vincent was right or vice versa,” Gediman said. “It just points out the fact that AI is tricky, and … it doesn’t matter … how many times you put a question in, each answer is going to be a little bit different each time you get it, even if it’s on the same system.”

Still, Gediman was impressed with Vincent’s performance on this question, noting that it found relevant language in a regulation that was not specific to the law in question.

“It managed … to find relevancy in a slightly broader frame of reference to apply, and I thought that was pretty, pretty cool,” Gediman said. “I wouldn’t have found this on my own, quite honestly, and I like to think I’m a pretty decent researcher.”

Third Question: Standard of Appeal

For the third question, regarding the standard for appealing class certification in California, all three AI tools correctly identified the abuse of discretion standard, but their presentations varied significantly. A key difference among them related to something called the “death knell” doctrine, which requires orders denying class certification in California to be appealed immediately.

On this question, Lexis+ AI provided the correct answer as to the standard, and Guyer, the panelist who tested it, thought the first paragraph of its answer did a good job of setting out the important information, including the factors courts look to in determining abuse of discretion. But she thought subsequent paragraphs became redundant, citing the same cases multiple times.

Importantly, however, the Lexis+ AI answer did not mention the “death knell” issue regarding the need to file an immediate appeal. “That was kind of important to me,” Guyer said.

Westlaw Precision AI also got the standard right and included the crucial warning about the immediate appeal requirement. But Guyer took issue with the way it presented its answer in a list format that could have been confusing to a researcher and might not have alerted them about the death knell issue. She also found that many of the secondary sources cited in support of the answer were not relevant, often drawing on federal law when the question involved a state statute.

Vincent AI offered perhaps the most well-rounded response, Guyer thought, calling it a “great answer.” It provided both a concise initial answer and a detailed explanation, including a unique “exceptions and limitations” section reminiscent of practice guides that highlighted the death knell warning.

Although Vincent’s initial answer also cited to some irrelevant secondary sources, Guyer liked that Vincent has a feature whereby the researcher can check boxes next to sources and eliminate them, and then regenerate the answer based only on the remaining sources. “I love that control that they give the user to be part of this gen AI experience in terms of what you want,” she said.

Guyer also liked that the Vincent AI answer could be exported and shared for collaboration with others. Recipients who also have a Vincent AI subscription can click a link to view the full AI answer, as well as manipulate and regenerate the query.

Summing It All Up

The panelists summed up their evaluation of the three platforms with the chart you see below, evaluating each of the six factors I listed above.

Across all three questions, each platform demonstrated distinctive strengths in how it presented and supported its answers, the panelists said.

Lexis+ AI consistently showed strong integration with Shepard’s citations and offered multiple report formats. Westlaw Precision AI’s integration with KeyCite and clear source validation tools made verification straightforward, though the platform’s recent shift to more concise answers was notable in the responses. Vincent AI stood out for its user control features, allowing researchers to filter sources and regenerate answers, as well as its unique relevancy ranking system.

For the panelists, the variations in responses, particularly for the more complex questions and recent legislation, underscored that these AI answer tools should be viewed as starting points rather than definitive sources.

On the topic of vendor transparency, the panelists said that none of the vendors currently disclose which specific AI models they use. While vendors may not share their underlying technology, they have been notably responsive to user feedback and quick to implement improvements, the panelists said.

The panel emphasized that despite advances in AI technology, these tools require careful oversight and validation. “Our users tend to think that AI is the solution to all of their life’s problems,” said panelist Livshits. “I spent a lot of time explaining that it’s a tool, not a solution, and explaining the limitations of it right now.”

Said Gediman: “Whenever I give the results to an attorney, I always include a disclaimer that this should be the beginning of your research, and you should review the results for relevance and applicability prior to using it, but you should not rely on it as is.”

Robert Ambrogi Blog
