Are you still on the fence about whether generative artificial intelligence can do the work of human lawyers? If so, I urge you to read this new study.

Published yesterday, this first-of-its-kind study evaluated the performance of four legal AI tools across seven core legal tasks. In many cases, it found, AI tools can perform at or above the level of human lawyers, while offering significantly faster response times.

The Vals Legal AI Report (VLAIR) represents the first systematic attempt to independently benchmark legal AI tools against a lawyer control group, using real-world tasks derived from Am Law 100 firms.

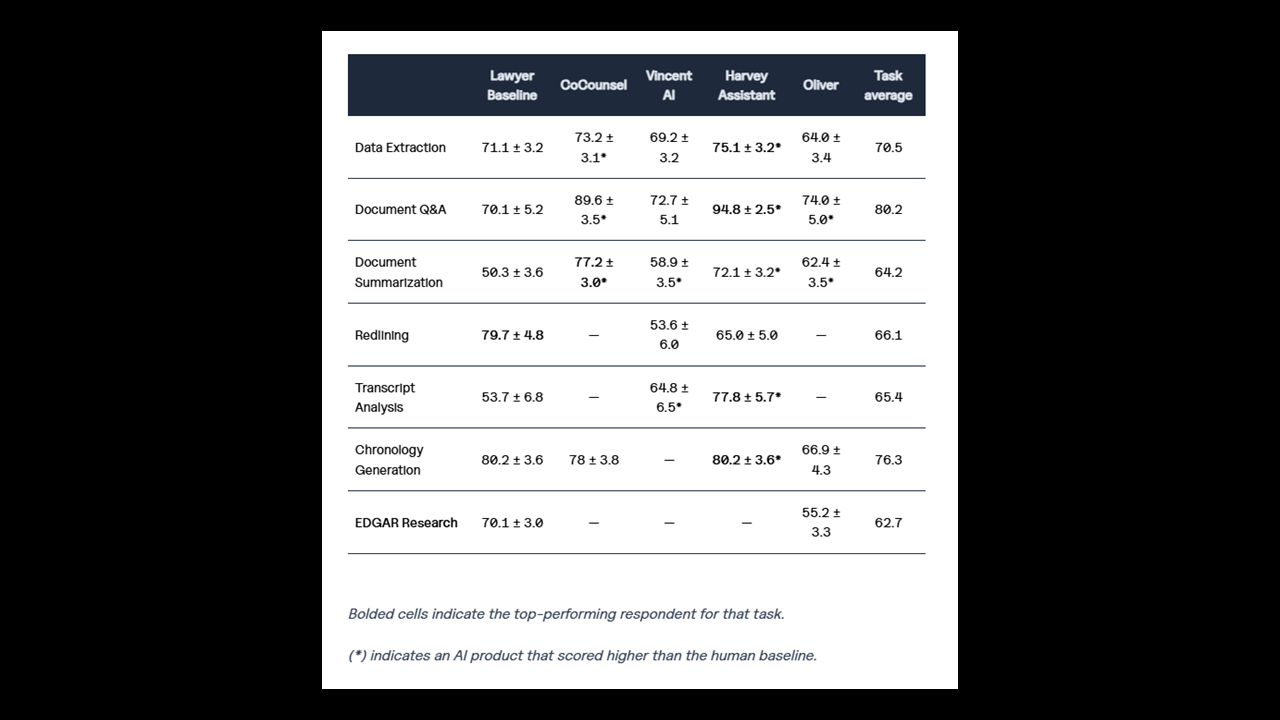

It evaluated AI tools from four vendors — Harvey, Thomson Reuters (CoCounsel), vLex (Vincent AI), and Vecflow (Oliver) — on tasks including document extraction, document Q&A, summarization, redlining, transcript analysis, chronology generation, and EDGAR research.

LexisNexis originally participated in the benchmarking but, after the report was written, it chose to withdraw from all the tasks in which it participated except legal research. The results of the legal research benchmarking will be published in a separate report.

Key Findings

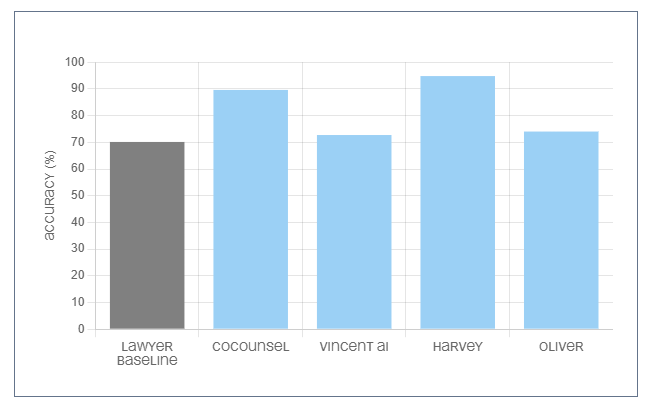

Harvey Assistant emerged as the standout performer, achieving the highest scores in five of the six tasks it participated in, including an impressive 94.8% accuracy rate for document Q&A. Harvey exceeded lawyer performance in four tasks and matched the baseline in chronology generation.

(Each vendor could choose which of the evaluated skills they wished to opt into.)

“Harvey’s platform leverages models to provide high-quality, reliable assistance for legal professionals,” the report said. “Harvey draws upon multiple LLMs and other models, including custom fine-tuned models trained on legal processes and data in partnership with OpenAI, with each query of the system involving between 30 and 1,500 model calls.”

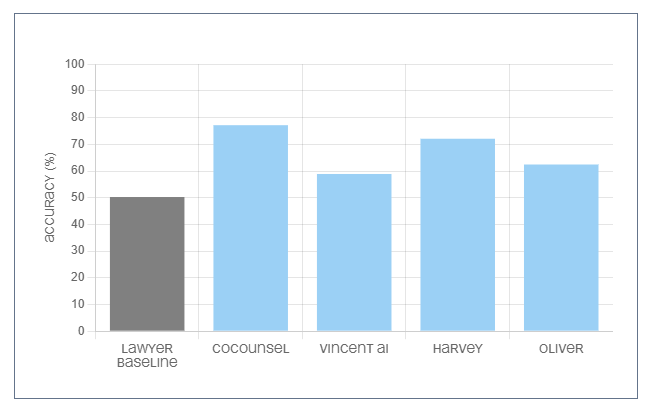

CoCounsel from Thomson Reuters was the only other vendor whose AI tool received a top score — 77.2% for document summarization — and consistently ranked among top-performing tools across all four tasks it participated in, with scores ranging from 73.2% to 89.6%.

The Lawyer Baseline (the results produced by a lawyer control group) outperformed the AI tools on two tasks, EDGAR research (70.1%) and redlining (79.7%), suggesting that, for now at least, these areas may remain better suited to human lawyers. AI tools collectively surpassed the Lawyer Baseline on document analysis, information retrieval and data extraction tasks.

Perhaps not surprisingly, the study found a dramatic difference in response times between AI and humans. The report found that AI tools were “six times faster than the lawyers at the lowest end, and 80 times faster at the highest end,” making a strong case for AI tools as efficiency drivers in legal workflows.

“The generative AI-based systems provide answers so quickly that they can be useful starting points for lawyers to begin their work more efficiently,” the report concluded.

Document Q&A produced the highest scores out of any task in the study, leading the report to conclude that it is a task for which lawyers should find value in using generative AI.

The report found that Harvey Assistant was consistently the fastest, with CoCounsel also being “extraordinarily quick,” both providing responses in less than a minute.

But it also said that Vincent AI “gave responses exceptionally quickly as generally one of the fastest products we evaluated.”

Oliver was found to be the slowest, often taking five minutes or more per query. The report said this is likely due to Oliver’s agentic workflow, which breaks tasks into multiple steps.

Vendor-Specific Performance

Harvey, the fastest-growing legal technology startup in the space (having raised over $200 million and achieved unicorn status since its founding in 2022), opted into more tasks than any other vendor and received the highest scores in document Q&A, document extraction, redlining, transcript analysis, and chronology generation.

“Harvey Assistant either matched or outperformed the Lawyer Baseline in five tasks and it outperformed the other AI tools in four tasks evaluated,” the report said. “Harvey Assistant also received two of the three highest scores across all tasks evaluated in the study, for Document Q&A (94.8%) and Chronology Generation (80.2% — matching the Lawyer Baseline).”

CoCounsel 2.0 from Thomson Reuters was submitted for four of the tasks and consistently performed well, the study found, achieving an average score of 79.5% across those tasks, which was the highest average score in the study. It particularly excelled at document Q&A (89.6%) and document summarization (77.2%).

“CoCounsel surpassed the Lawyer Baseline in those four tasks alone by more than 10 points,” the study said.

Vincent AI from vLex participated in six tasks — second only to Harvey in number of tasks — with scores ranging from 53.6% to 72.7%, outperforming the Lawyer Baseline on document Q&A, document summarization, and transcript analysis.

The report said that Vincent AI’s design is particularly noteworthy for its ability to infer the appropriate subskill to execute based on the user’s question, and that the answers it provided were “impressively thorough.”

Oddly (I thought), the report praised Vincent AI for declining to answer questions when it lacked sufficient data, rather than giving a hallucinated answer. But the report said those refusals also negatively affected its scores.

Oliver, released last September by the startup Vecflow, was described in the report as “the best-performing AI tool” on the challenging EDGAR research task. That would seem a given, since it was the only AI tool to participate in that task. It scored 55.2%, against the Lawyer Baseline’s 70.1%.

The report highlighted Oliver’s “agentic workflow” approach as potentially valuable for complex research tasks requiring multiple steps and iterative decision-making, and said it excels at explaining its reasoning and actions as it works.

“Oliver bested at least one other product for every task it opted into,” the report said. “Oliver also outperformed the Lawyer Baseline for Document Q&A and Document Summarization.”

Methodology

The study was developed in partnership with Legaltech Hub and a consortium of law firms including Reed Smith, Fisher Phillips, McDermott Will & Emery, and Ogletree Deakins, along with four anonymous firms. The consortium created a dataset of over 500 samples reflecting real-world legal tasks.

Vals AI developed an automated evaluation framework to provide consistent assessment across tasks. The study notes that the lawyer control group was “blind” — participating lawyers were unaware they were part of a benchmarking study and received assignments formatted as typical client requests.

Tara Waters was Vals AI’s project lead for the study.

Future Directions

The report indicates this benchmark is the first iteration of what it says will be a regular evaluation of legal industry AI tools, with plans to repeat this study annually and add others. Future iterations may expand to include more vendors, additional tasks, and coverage of international jurisdictions beyond the current U.S. focus.

“There is growing momentum across the legal industry for standardized methodologies, benchmarking, and a shared language for evaluating AI tools,” the report notes.

Nicola Shaver and Jeroen Plink of Legaltech Hub were credited for their “partnership in conceptualizing and designing the study and bringing together a high-quality cohort of vendors and law firms.”

“Overall, this study’s results support the conclusion that these legal AI tools have value for lawyers and law firms,” the study concludes, “although there remains room for improvement in both how we evaluate these tools and their performance.”

Robert Ambrogi Blog
