At a time when some courts are still questioning or even banning the use of generative artificial intelligence, a recent decision from the District of Columbia Court of Appeals is notable for the fact that both the majority and dissenting opinions openly discussed their use of ChatGPT in their deliberations.

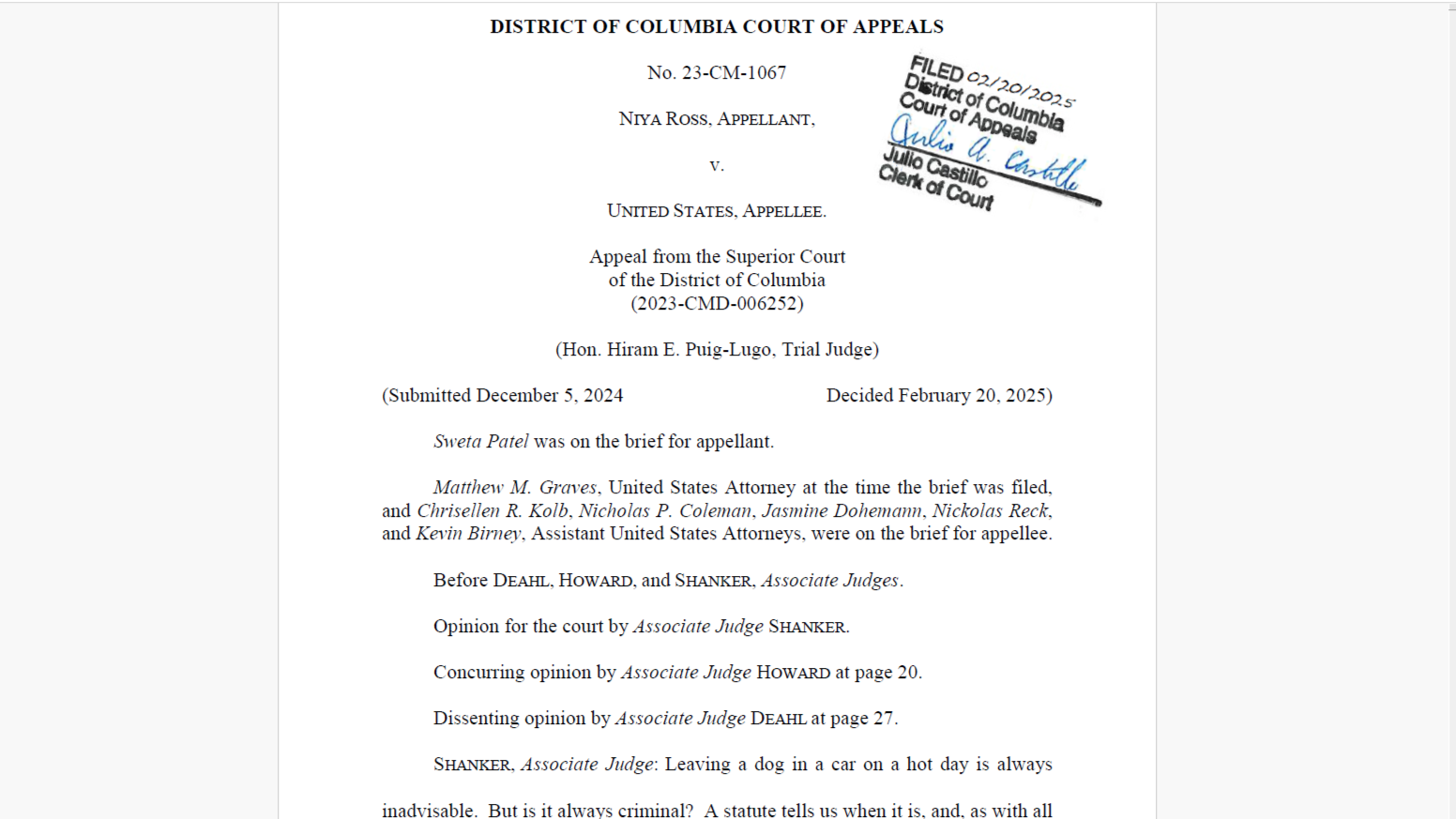

The decision, issued Feb. 20 in the case Ross v. United States, came in an appeal of a matter in which a lower court had convicted Niya Ross of animal cruelty after she left her dog Cinnamon in a car on a 98-degree day.

The opinion is the first by that court to publicly discuss its use of AI tools in decision-making, and it was unusual in that both the majority and dissent cited ChatGPT, which in turn led to a concurring opinion written solely to point out and discuss the court’s use of gen AI.

AI Usage in the Opinion

The majority opinion reversed the conviction of Ross after concluding that the government had failed to present sufficient evidence to demonstrate that the circumstances in which the dog was found caused it to suffer.

In dissenting from that decision, Associate Judge Joshua Deahl argued that it was common knowledge that leaving a dog in a car under the circumstances of this case would result in harm.

To bolster that argument and further explore the issue of what constitutes “common knowledge” about the potential harm of leaving a dog in a hot car, he directly consulted ChatGPT, prompting it with the question: “Is it harmful to leave a dog in a car, with the windows down a few inches, for an hour and twenty minutes when it’s 98 degrees outside?”

ChatGPT responded without equivocation: “Yes, leaving a dog in a car under these conditions is very harmful.” It went on to describe the potential dangers, stating that temperatures inside a car could quickly rise to over 120°F, potentially causing heatstroke or being fatal to a dog.

Judge Deahl contrasted ChatGPT’s response to this query with its response to another query, which he based on the facts of an earlier precedent in which a German shepherd was left outside for five hours in 25-degree temperatures. When he asked ChatGPT about that, it gave a response that, as the judge put it, “boils down to ‘it depends.’”

From those queries, the judge concluded that the response to the first was the equivalent of “yes beyond a reasonable doubt,” while the response to the second was that the potential of danger to the dog was not beyond a reasonable doubt.

“I think that aligns perfectly with what my own common sense tells me,” he wrote.

‘More Than A Gimmick’

The majority opinion, written by Associate Judge Vijay Shanker, while reversing the conviction, also referenced the AI discussion. In a footnote, the majority noted its skepticism about using ChatGPT as a proxy for common knowledge.

In a concurring opinion, Associate Judge John P. Howard III provided a more comprehensive exploration of AI’s emerging role in the judicial system. He noted that AI tools are “more than a gimmick” and are increasingly coming to courts in various ways.

Judge Howard highlighted what he believes are several critical considerations for judicial AI use:

- Courts must approach AI technology cautiously.

- Specific use cases need careful consideration.

- Potential issues include security, privacy, reliability, and bias.

- Judicial officers must understand what data AI tools collect and how they use it.

Against that backdrop, he credited his colleagues for using AI appropriately, particularly in that they did not even inadvertently risk exposing deliberative information.

“It strikes me that the thoughtful use employed by both of my colleagues are good examples of judicial AI tool use for many reasons — including the consideration of the relative value of the results — but especially because it is clear that this was no delegation of decision-making, but instead the use of a tool to aid the judicial mind in carefully considering the problems of the case more deeply,” he wrote, adding: “Interesting indeed.”

Broader Implications

This opinion is not the first in which a judge has publicly discussed his use of gen AI in deciding a case. Last year, 11th U.S. Circuit Court of Appeals Judge Kevin Newsom made news for his 32-page concurring opinion pondering the use of generative AI by courts in interpreting words and phrases.

However, this appears to be among the first published judicial discussions explicitly detailing AI tool usage in legal decision-making. And while the judges used ChatGPT more as an exploratory tool than a decision-making mechanism, the transparency about their AI interaction is notable.

“AI tools are more than a gimmick; they are coming to courts in various ways, and judges will have to develop competency in this technology, even if the judge wishes to avoid using it,” Judge Howard wrote. “Courts, however, must and are approaching the use of such technology cautiously.”

Robert Ambrogi Blog
